When OpenAI dropped GPT-OSS, it didn’t feel like a typical product launch. It felt like a seismic shift.

The name itself—GPT-OSS (short for “Open-Source Stack” or “Open-Source Siblings,” depending on who you ask)—signals the change: a powerful, open-weights model optimized for multi-step reasoning, not just chat. And unlike GPT-4, you can actually run it locally. Fine-tune it. Inspect it.

For developers and AI tinkerers who’ve been waiting for a truly usable open model, one that doesn’t just mimic GPT but reasons with GPT-4-level sharpness? This is your moment.

GPT-OSS is a state-of-the-art family of open-weight, mixture-of-experts transformer models (gpt-oss-120b and gpt-oss-20b) released by OpenAI in August 2025 under the Apache 2.0 license.

It was trained using OpenAI's best-in-class data pipeline but deliberately released with open weights—a rarity among top-tier models.

Key highlights:

On Reddit’s /r/singularity, one user described GPT-OSS as:

“The first open model that actually thinks—not just talks.”

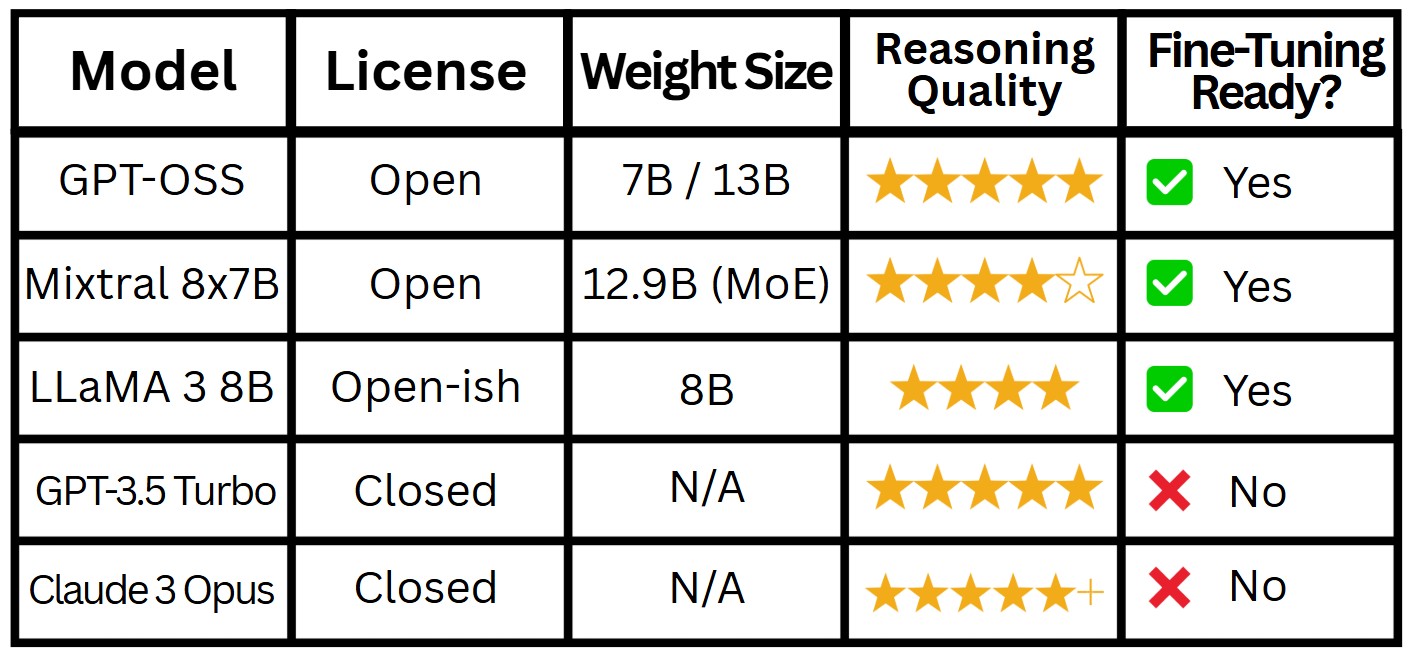

Open-weight models have historically lagged behind proprietary giants in terms of performance, especially on reasoning-heavy tasks like math, logic, and code.

GPT-OSS changes that.

Early benchmarks show:

If you’re building agents, running code interpreters, or working on tool-using LLMs, this is the best base model you can freely use today.

OpenAI didn’t just throw some weights on Hugging Face and call it a day.

GPT-OSS comes with:

It’s a dream for anyone working on agent frameworks, local hosting, or edge devices.
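To make the local-hosting point concrete, here is a minimal sketch of running the smaller checkpoint on your own machine with Hugging Face Transformers. It assumes a recent `transformers` release with gpt-oss support, plus `torch` and `accelerate`, the `openai/gpt-oss-20b` checkpoint on the Hugging Face Hub, and enough memory to load it. The helper names `build_messages` and `generate_locally` are hypothetical, and the `Reasoning: <level>` system line follows the reasoning-effort convention described on the model card as we understand it, so verify it against the current docs.

```python
# Minimal local-inference sketch for gpt-oss-20b via Hugging Face Transformers.
# Assumptions (not from the article): a recent `transformers` with gpt-oss
# support, `torch` and `accelerate` installed, and enough memory for 20B weights.
# Helper names below are hypothetical, chosen for illustration.

VALID_EFFORTS = ("low", "medium", "high")


def build_messages(prompt: str, reasoning: str = "medium") -> list[dict]:
    """Build a chat-style message list; the system line requests a reasoning effort."""
    if reasoning not in VALID_EFFORTS:
        raise ValueError(f"reasoning must be one of {VALID_EFFORTS}")
    return [
        # Assumed convention from the model card: "Reasoning: low|medium|high".
        {"role": "system", "content": f"Reasoning: {reasoning}"},
        {"role": "user", "content": prompt},
    ]


def generate_locally(prompt: str, reasoning: str = "medium",
                     model_id: str = "openai/gpt-oss-20b",
                     max_new_tokens: int = 256) -> dict:
    """Load the open weights locally and return the model's reply message."""
    # Imported lazily so build_messages() works without transformers installed.
    from transformers import pipeline

    pipe = pipeline(
        "text-generation",
        model=model_id,
        torch_dtype="auto",  # use the dtype stored with the checkpoint
        device_map="auto",   # let accelerate place layers on available devices
    )
    outputs = pipe(build_messages(prompt, reasoning), max_new_tokens=max_new_tokens)
    # With chat-style input, generated_text holds the whole conversation;
    # its last entry is the assistant's reply.
    return outputs[0]["generated_text"][-1]
```

Calling something like `generate_locally("Walk through 17 * 24 step by step.", reasoning="high")` should return the reply as a dict with `role` and `content` keys. The pipeline is rebuilt on every call to keep the sketch short; in an agent loop you would load it once and reuse it.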

Most open models are trained to “sound smart.” GPT-OSS is trained to be smart.

This model excels at:

Think of it less as a chatbot and more as a base for Copilot-style assistants or autonomous agents.

Even though it’s powerful, GPT-OSS is not magic.

Still, it’s the most promising open-weight model for developers who care about reasoning and customization.

GPT-OSS is more than just a nice open model. It’s a signpost.

It shows us that open doesn’t have to mean second-rate. That we can build powerful reasoning systems without a monthly API bill. That we can audit, fine-tune, and deploy intelligence on our own terms.

If you’ve been waiting for a “real” open model to build with? GPT-OSS is it.